Artificial intelligence systems are no longer experimental tools used only by researchers. They are now deeply embedded in cybersecurity, data protection, identity management, and enterprise compliance workflows. As AI adoption grows, so does the need for clear, measurable, and trustworthy standards that ensure these systems operate safely, ethically, and effectively. This is where the Keeper AI Standards Test becomes critically important.

The Keeper AI Standards Test is designed to evaluate whether AI-driven systems, particularly those used in security, password management, and enterprise data protection, meet strict benchmarks for reliability, transparency, compliance, and risk management. For organizations, developers, and security professionals, understanding this test is not optional. It is a foundational step toward building AI systems that align with modern regulatory expectations and real-world security demands.

Understanding the Keeper AI Standards Test

The Keeper AI Standards Test is a structured evaluation framework used to assess the quality, safety, reliability, and governance of AI-powered systems. It checks whether AI technologies operate effectively, comply with regulations, and follow ethical guidelines, with the aim of minimizing risks such as bias and unsafe behavior. While the test is closely associated with AI solutions used in cybersecurity and digital asset protection, its principles apply broadly across enterprise AI deployments. At its core, the test measures whether an AI system behaves in a predictable, secure, and compliant manner under real-world conditions. By feeding its results back into ongoing monitoring and improvement, it helps maintain public trust in AI while still leaving room for innovation.

It focuses not only on technical performance but also on ethical design, data handling practices, and operational accountability. Unlike basic functional testing, the Keeper AI Standards Test evaluates how an AI system makes decisions, how it handles sensitive data, and how it responds to edge cases such as incomplete information, unexpected inputs, or security threats. This makes it particularly relevant in environments where AI decisions directly impact user safety or organizational risk.

Why AI Standards Testing Is Essential Today

AI systems are increasingly responsible for decisions that were once made by humans. In cybersecurity, AI may decide whether to flag a login attempt as suspicious, revoke access to sensitive systems, or trigger automated responses. These decisions must be accurate, explainable, and free from hidden bias.

Without standardized testing, AI systems can introduce serious risks. Poorly trained models may generate false positives, lock out legitimate users, or fail to detect real threats. Even worse, they may violate privacy laws or expose sensitive data due to flawed design assumptions.

The Keeper AI Standards Test exists to reduce these risks by providing a consistent and objective method for evaluating AI readiness. It helps organizations demonstrate that their AI systems are not only innovative but also responsible and trustworthy.

Core Objectives of the Keeper AI Standards Test

The test is built around several key objectives that align with modern enterprise and regulatory expectations:

Security Resilience

First, it assesses security resilience. AI systems must resist manipulation, data poisoning, and adversarial attacks. The test examines whether the model can maintain accuracy and stability even when exposed to malicious inputs.

Data Governance

Second, it evaluates data governance. This includes how training data is sourced, stored, processed, and protected. The test verifies that AI systems follow privacy-by-design principles and comply with relevant data protection regulations.

Decision Transparency

Third, it measures decision transparency. AI outputs must be explainable to security teams and auditors. The test checks whether system decisions can be traced back to understandable logic rather than being treated as unexplainable black boxes.

Operational Reliability

Finally, it assesses operational reliability. AI systems must perform consistently across environments, workloads, and usage scenarios. The test checks that results are reproducible and not overly sensitive to minor changes in input data.
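One way to check the "not overly sensitive to minor changes" criterion is to perturb inputs slightly and count how often the decision flips. The sketch below assumes the model is exposed as a plain `predict` callable over numeric feature vectors; the function name, the noise level, the trial count, and the toy model are illustrative choices, not parameters defined by the Keeper AI Standards Test.

```python
import random
from typing import Callable, Sequence


def perturbation_stability(
    predict: Callable[[Sequence[float]], int],
    samples: Sequence[Sequence[float]],
    noise: float = 0.01,
    trials: int = 20,
    seed: int = 0,
) -> float:
    """Fraction of (sample, trial) pairs whose prediction is unchanged after
    adding uniform noise in [-noise, noise] to every feature."""
    rng = random.Random(seed)  # fixed seed so the check itself is reproducible
    unchanged = 0
    total = 0
    for x in samples:
        baseline = predict(x)
        for _ in range(trials):
            perturbed = [v + rng.uniform(-noise, noise) for v in x]
            if predict(perturbed) == baseline:
                unchanged += 1
            total += 1
    return unchanged / total if total else 1.0


# Toy stand-in model: flag an input when its feature sum exceeds 1.0.
def toy_model(x: Sequence[float]) -> int:
    return int(sum(x) > 1.0)


print(perturbation_stability(toy_model, [[0.2, 0.3], [0.9, 0.8]]))  # 1.0
print(perturbation_stability(toy_model, [[0.5, 0.5]]))  # sits exactly on the threshold
```

A score well below 1.0 on inputs that sit far from any decision boundary would suggest a brittle model. Lower scores on borderline inputs, as in the second call, are expected and are better judged against a documented tolerance.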

Key Components Evaluated in the Keeper AI Standards Test

AI Model Integrity and Performance

One of the most critical aspects of the Keeper AI Standards Test is verifying that the AI model performs reliably under realistic conditions. This includes testing accuracy, precision, recall, and consistency across datasets. Rather than focusing on ideal laboratory conditions, the test emphasizes real-world usage scenarios.
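The core metrics named above can be computed directly from a confusion matrix. This is a minimal sketch assuming binary 0/1 labels; the dataset names and values are made up for illustration, and a real evaluation would more likely rely on an established library such as scikit-learn.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Metrics:
    accuracy: float
    precision: float
    recall: float


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Metrics:
    """Accuracy, precision and recall for 0/1 labels, where 1 marks the
    positive class (for example, a suspicious login)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = len(pairs) - tp - fp - fn  # valid because labels are strictly 0 or 1
    return Metrics(
        accuracy=(tp + tn) / len(pairs) if pairs else 0.0,
        precision=tp / (tp + fp) if tp + fp else 0.0,  # flagged items that were real
        recall=tp / (tp + fn) if tp + fn else 0.0,      # real items that were flagged
    )


# Reporting per dataset makes (in)consistency across datasets visible.
datasets = {
    "curated_sample": ([1, 0, 0, 1, 0, 0, 0, 0, 0, 1], [1, 0, 1, 0, 0, 0, 0, 0, 0, 1]),
    "rare_attack_sample": ([0, 0, 0, 0, 1], [0, 0, 0, 0, 0]),
}
for name, (truth, preds) in datasets.items():
    print(name, binary_metrics(truth, preds))
```

Both hypothetical datasets score 0.8 accuracy, yet the second has a recall of 0.0 because the model missed the only attack. This is why precision and recall are reported alongside accuracy for rare security events.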

Models are evaluated against noisy data, incomplete inputs, and unexpected patterns to ensure they can handle the complexity of live environments. Performance testing also includes monitoring model drift over time. AI systems that degrade in accuracy without proper retraining can pose serious risks, especially in security-sensitive applications.
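Drift monitoring often starts by comparing the distribution of model scores in production with the distribution seen at validation time. The sketch below swaps in one widely used measure, the population stability index (PSI); the function name, the equal-width binning, and the `eps` smoothing are assumptions for illustration rather than the test's prescribed method.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline score distribution and a live one.

    Bin edges are equal-width over the baseline's range; live values outside
    that range are clamped into the first or last bin, and eps replaces empty
    bin proportions so the logarithm is always defined."""
    if not expected or not actual:
        raise ValueError("both distributions need at least one value")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # constant baseline: avoid division by zero

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]     # scores at validation time
live = [0.9 + i / 1000 for i in range(100)]  # scores bunched near the top
print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, live))
```

Identical distributions give a PSI of 0, while the shifted live scores give a value far above 0.25. In practice, 0.1 and 0.25 are commonly cited rule-of-thumb thresholds for moderate and significant shift; they are conventions, not values defined by the Keeper AI Standards Test.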

Data Privacy and Compliance

Data is the foundation of any AI system, and mishandling it can lead to severe legal and reputational consequences. The Keeper AI Standards Test examines how data is collected, anonymized, stored, and accessed. Special attention is given to personally identifiable information and sensitive enterprise data.
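A common building block for this kind of review is keyed pseudonymization of identifiers before data reaches training or analytics pipelines. The sketch below uses Python's standard `hmac` and `hashlib` modules; the `PII_FIELDS` set, the field names, and the key string are hypothetical. This is pseudonymization rather than full anonymization: under the GDPR, pseudonymized data still counts as personal data, because whoever holds the key can re-link tokens to candidate values.

```python
import hashlib
import hmac
from typing import Any, Dict

# Hypothetical field list; a real deployment would derive this from a data inventory.
PII_FIELDS = {"email", "username", "ip_address"}


def pseudonymize(record: Dict[str, Any], key: bytes) -> Dict[str, Any]:
    """Return a copy of record with PII values replaced by HMAC-SHA256 tokens.

    The same value and key always yield the same token, so records can still be
    joined or counted, but linking a token back to a value requires the key."""
    out: Dict[str, Any] = {}
    for name, value in record.items():
        if name in PII_FIELDS and value is not None:
            out[name] = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
        else:
            out[name] = value
    return out


event = {"email": "alice@example.com", "ip_address": "203.0.113.7", "event": "login_failed"}
print(pseudonymize(event, key=b"load-this-from-a-secrets-manager"))
```

Using a keyed HMAC rather than a plain SHA-256 hash matters for low-entropy values such as email or IPv4 addresses, which an attacker could otherwise recover by hashing candidate values. The key should be stored and rotated separately from the data it protects.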

The test checks that AI systems do not retain unnecessary data, expose training datasets, or violate regional privacy regulations such as the GDPR or similar frameworks. Compliance is not treated as a checkbox exercise; instead, the test evaluates whether privacy principles are embedded into the system’s design and daily operation.
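To make "do not retain unnecessary data" checkable, retention rules can be written down per data category and swept automatically. The categories, periods, record shape, and `overdue_records` helper below are hypothetical; actual retention periods come from an organization's own legal and policy review, not from the test.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, Iterator, Optional

# Hypothetical retention policy: maximum age per data category.
RETENTION: Dict[str, timedelta] = {
    "auth_logs": timedelta(days=90),
    "training_samples": timedelta(days=365),
}


def overdue_records(records: Iterable[dict], now: Optional[datetime] = None) -> Iterator[dict]:
    """Yield records that have outlived their category's retention period.

    Records in a category with no defined period are yielded as well, since data
    without a documented retention justification should be reviewed."""
    now = now or datetime.now(timezone.utc)
    for rec in records:
        limit = RETENTION.get(rec["category"])
        if limit is None or now - rec["created_at"] > limit:
            yield rec


utc = timezone.utc
records = [
    {"id": 1, "category": "auth_logs", "created_at": datetime(2024, 1, 1, tzinfo=utc)},
    {"id": 2, "category": "auth_logs", "created_at": datetime(2024, 5, 20, tzinfo=utc)},
    {"id": 3, "category": "debug_dumps", "created_at": datetime(2024, 5, 30, tzinfo=utc)},
]
as_of = datetime(2024, 6, 1, tzinfo=utc)
print([r["id"] for r in overdue_records(records, now=as_of)])  # [1, 3]
```

Flagging unknown categories by default is a design choice: it turns unclassified data into a visible review item instead of something that is silently kept forever.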

Ethical AI and Bias Mitigation

Bias in AI systems can lead to unfair outcomes, particularly in access control and identity verification systems. The Keeper AI Standards Test includes assessments to detect and mitigate algorithmic bias. This involves analyzing training datasets for imbalance and testing outputs across different user profiles.

The goal is not to eliminate all bias, which is often unrealistic, but to ensure that bias is identified, documented, and actively managed. Ethical AI practices are increasingly important for organizations seeking long-term trust from users and regulators. Passing this part of the test demonstrates a proactive approach to responsible AI development.
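One concrete form of "testing outputs across different user profiles" is to compare error rates per group. In access control, the false positive rate is a natural choice because it measures how often legitimate users are wrongly flagged or locked out. The sketch assumes labelled evaluation data with a group attribute; the profile names and helper functions are hypothetical, and the false positive rate is only one of several fairness measures a reviewer might choose.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def false_positive_rates(rows: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """rows are (group, y_true, y_pred) with 1 = flagged as suspicious.
    Returns each group's false positive rate, FP / (FP + TN): the share of
    legitimate users in that group who were wrongly flagged."""
    flagged = defaultdict(int)
    legitimate = defaultdict(int)
    for group, y_true, y_pred in rows:
        if y_true == 0:
            legitimate[group] += 1
            if y_pred == 1:
                flagged[group] += 1
    return {g: flagged[g] / n for g, n in legitimate.items()}


def max_gap(rates: Dict[str, float]) -> float:
    """Largest difference in false positive rate between any two groups."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0


rows = [
    ("profile_a", 0, 0), ("profile_a", 0, 1), ("profile_a", 0, 0), ("profile_a", 0, 0),
    ("profile_b", 0, 1), ("profile_b", 0, 1), ("profile_b", 0, 0), ("profile_b", 0, 0),
    ("profile_a", 1, 1),
]
rates = false_positive_rates(rows)
print(rates, max_gap(rates))  # {'profile_a': 0.25, 'profile_b': 0.5} 0.25
```

Here legitimate users in `profile_b` are flagged twice as often as those in `profile_a`. Whether a gap of 0.25 is acceptable is a policy decision; what matters is that the gap is measured, documented, and tracked over time.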

Explainability and Auditability

In high-stakes environments, organizations must be able to explain why an AI system made a particular decision. The Keeper AI Standards Test evaluates whether decisions can be audited and understood by human reviewers. This includes reviewing model documentation, decision logs, and system outputs.

Explainability is particularly important during security incidents, compliance audits, or legal investigations. AI systems that fail to provide clear explanations often struggle to meet enterprise governance standards, regardless of how accurate they appear.
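A decision log only supports audits if each entry records enough context to reconstruct the decision. The sketch below shows one possible record layout; the field names, the model version string, and the `top_factors` weights (which in practice would come from whatever explanation method the system uses) are all hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List, Tuple


def decision_log_entry(
    model_version: str,
    features: Dict[str, Any],
    decision: str,
    top_factors: List[Tuple[str, float]],
) -> str:
    """Serialize one AI decision as a JSON audit record.

    The input is stored as a SHA-256 fingerprint of its canonical JSON form, so an
    auditor can confirm which input produced the decision (by re-hashing a copy
    held elsewhere) without the log duplicating sensitive values."""
    canonical = json.dumps(features, sort_keys=True, separators=(",", ":"))
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "decision": decision,
        "top_factors": [{"feature": f, "weight": w} for f, w in top_factors],
    }
    return json.dumps(record, sort_keys=True)


print(decision_log_entry(
    model_version="login-risk-2.3.1",
    features={"new_device": True, "country_change": True, "failed_attempts": 3},
    decision="require_step_up_auth",
    top_factors=[("new_device", 0.41), ("country_change", 0.33)],
))
```

Sorting keys before hashing makes the fingerprint independent of field order. For highly guessable inputs, a keyed hash would be safer than plain SHA-256, since an unkeyed fingerprint can be matched by hashing candidate inputs.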

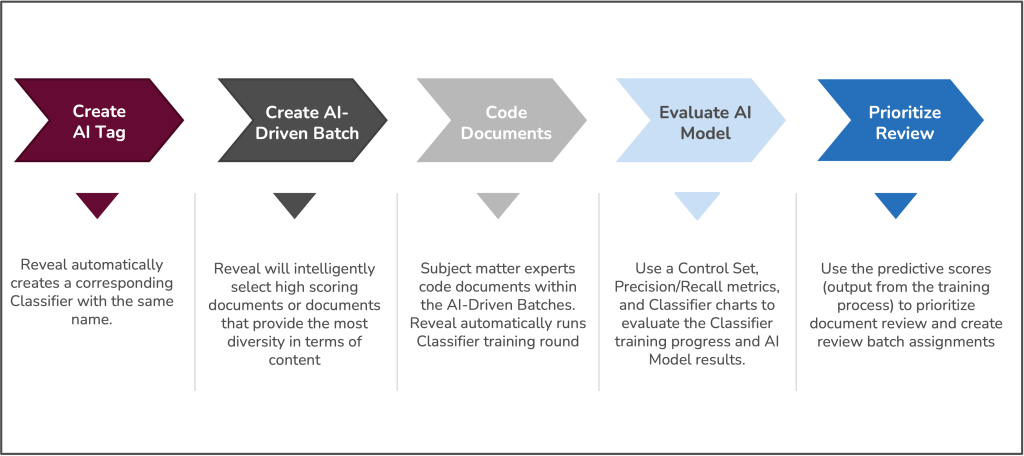

How the Keeper AI Standards Test Is Conducted

The testing process typically follows a structured and repeatable methodology. While exact implementation details may vary, the overall flow remains consistent.

System documentation review: Testers analyze architectural diagrams, data flow maps, and AI model descriptions to understand how the system is designed.

Controlled environment testing: The AI system is evaluated using standardized datasets and predefined scenarios to establish baseline performance metrics.

Stress and edge-case testing: The system is exposed to unusual inputs, adversarial data, and simulated attack scenarios to assess its resilience.

Reporting and recommendations: Test results are documented in detail, highlighting strengths, weaknesses, and areas for improvement.
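The controlled-environment and edge-case steps can be automated with a small scenario runner whose output feeds the final report. The sketch below is an assumption about how such a harness might be organized, not a description of the test's actual tooling; `Scenario`, `run_scenarios`, the 0.95 pass threshold, and the toy `flag` rule are all hypothetical. The documentation review step remains a human activity and is not modeled.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Scenario:
    name: str
    stage: str  # e.g. "baseline" or "edge_case"
    inputs: List[Any]
    expected: List[Any]


@dataclass
class Report:
    threshold: float
    results: List[Dict[str, Any]] = field(default_factory=list)

    def weaknesses(self) -> List[str]:
        """Scenarios whose pass rate fell below the threshold."""
        return [r["scenario"] for r in self.results if r["pass_rate"] < self.threshold]


def run_scenarios(predict: Callable[[Any], Any], scenarios: List[Scenario],
                  threshold: float = 0.95) -> Report:
    report = Report(threshold=threshold)
    for s in scenarios:
        outcomes = []
        for x, want in zip(s.inputs, s.expected):
            try:
                outcomes.append(predict(x) == want)
            except Exception:
                # A crash on unusual input is recorded as a failure, not an abort.
                outcomes.append(False)
        rate = sum(outcomes) / len(outcomes) if outcomes else 0.0
        report.results.append({"scenario": s.name, "stage": s.stage, "pass_rate": rate})
    return report


# Toy rule standing in for a model: flag accounts with 5+ failed logins.
def flag(x: Dict[str, Any]) -> int:
    return int(x["failed_attempts"] >= 5)


report = run_scenarios(flag, [
    Scenario("normal_traffic", "baseline", [{"failed_attempts": 0}, {"failed_attempts": 7}], [0, 1]),
    Scenario("missing_fields", "edge_case", [{"failed_attempts": None}, {}], [1, 1]),
])
print(report.results)
print("needs attention:", report.weaknesses())  # needs attention: ['missing_fields']
```

Treating exceptions as failures is deliberate: a system that crashes on a missing field during stress testing has a weakness worth reporting, even if its baseline results are perfect. The expectation that incomplete inputs should be flagged is itself a hypothetical policy chosen for the example.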

Ethical Considerations in the Keeper AI Standards Test

Why Ethics Matter in the Keeper AI Standards Test

Ethics in AI is vital for preventing misuse and ensuring beneficial outcomes. Ethical AI respects user privacy, avoids bias, and makes decisions that are fair and transparent. This is essential for maintaining public trust and achieving a positive societal impact.

Key Ethical Principles

Some key ethical principles guide the responsible use of AI systems. Fairness ensures that AI does not discriminate against any individual or group, while transparency focuses on making the system’s decision-making process understandable to users. Accountability emphasizes that developers and operators must take responsibility for how AI systems behave and are used. Privacy is equally important, as it involves protecting user data from unauthorized access and preventing misuse.

Technical Requirements for the Keeper AI Standards Test

Hardware Specifications

The Keeper AI Standards Test assesses whether the AI system’s hardware meets the necessary requirements for optimal performance, including sufficient processing power, memory capacity, and connectivity to handle large datasets and complex computations.

Software Capabilities

Software capabilities are evaluated to ensure the AI system can efficiently process data, learn from it, and make accurate decisions. This includes assessing the algorithms used, the quality of the training data, and the overall software architecture.

Performance Metrics Evaluated in the Keeper AI Standards Test

Accuracy

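Accuracy is crucial for the reliability of AI systems. The Keeper AI Standards Test measures how often the AI system produces correct results when performing its tasks, such as recognizing patterns, making predictions, or providing recommendations. High accuracy is essential for applications like medical diagnosis or financial forecasting. Because the events that matter most in security, such as real attacks, are rare, accuracy is most informative when read alongside precision and recall: a model that never flags anything can still achieve a high accuracy score.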

Speed

Speed is important for real-time applications. The test evaluates how quickly the AI system processes information and responds to inputs. Fast response times are critical in scenarios like autonomous driving or emergency response systems.
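Response time is usually reported as percentiles rather than an average, because a fast median can hide a slow tail that matters in real-time decisions such as blocking a login. The sketch below times a callable with Python's `time.perf_counter` and uses the nearest-rank percentile definition; the chosen percentiles (p50, p95, p99), the helper names, and the stand-in workload are assumptions, and a real evaluation would also control for warm-up, concurrency, and hardware.

```python
import math
import time
from typing import Any, Callable, Dict, List, Sequence


def nearest_rank(sorted_samples: List[float], p: float) -> float:
    """p-th percentile (0 < p <= 100) of an ascending list, nearest-rank method."""
    rank = max(1, math.ceil(p * len(sorted_samples) / 100))
    return sorted_samples[rank - 1]


def latency_percentiles(fn: Callable[[Any], Any], inputs: Sequence[Any],
                        percentiles=(50, 95, 99)) -> Dict[float, float]:
    """Call fn once per input and report latency percentiles in milliseconds."""
    if not inputs:
        raise ValueError("need at least one input to time")
    samples = []
    for x in inputs:
        start = time.perf_counter()  # monotonic, high-resolution clock
        fn(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return {p: nearest_rank(samples, p) for p in percentiles}


print(latency_percentiles(lambda n: sum(range(n)), [10_000] * 200))
```

Reporting p95 and p99 next to the median makes it visible when a system is usually fast but occasionally too slow for the real-time scenarios described above.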

Reliability

Reliability ensures that the AI system performs consistently under different conditions and over time. The test assesses the system’s ability to maintain performance levels despite changes in the operating environment or data inputs.

Benefits of the Keeper AI Standards Test

Implementing AI standards brings many benefits, such as:

- Improved Service Quality: Ensuring AI systems provide high-quality and reliable services.

- Risk Reduction: Minimizing the risk of errors and biases in AI decision-making processes.

- Increased Trust: Building user confidence in AI technologies through demonstrated adherence to standards.

Challenges in Applying the Keeper AI Standards Test

Implementing AI standards can be challenging due to:

- Rapid Technological Advances: Keeping standards up to date with fast-evolving AI technologies.

- Integration Challenges: Integrating standards into existing workflows and systems.

- Global Compliance: Ensuring standards are applicable and enforced across different regions and regulatory environments.

Common Challenges and How to Overcome Them

One common challenge is insufficient documentation. Many AI systems evolve rapidly, and documentation often lags behind development. Addressing this requires disciplined version control and clear ownership of documentation tasks.

Another challenge is data quality. AI models trained on outdated or biased data may struggle to meet standards. Regular dataset reviews and retraining schedules help mitigate this issue.

Explainability can also be difficult, especially with complex models. Using interpretable models where possible, or adding explanation layers to existing models, can significantly improve outcomes.

Long-Term Benefits of Passing the Keeper AI Standards Test

Passing the Keeper AI Standards Test offers more than immediate validation. It builds long-term trust with users, partners, and regulators. Organizations that meet these standards are better positioned to scale their AI deployments responsibly. They also gain a competitive advantage by demonstrating commitment to security and ethical AI practices. Over time, the discipline required to meet these standards often leads to better engineering practices, clearer governance structures, and more resilient systems overall.

The Future of AI Standards and Keeper’s Role

Trends and Predictions

Future developments in AI are expected to place a stronger emphasis on ethical considerations, ensuring that systems remain fair, transparent, and trustworthy. Advances in computing, potentially including quantum computing in the longer term, may further expand AI capabilities and processing power. Evolving standards may also need to address more personalized, user-centric AI experiences, in which systems adapt more closely to individual user needs and preferences.

Emerging Technologies

Technologies such as blockchain and the Internet of Things (IoT) are expected to influence future AI standards. Blockchain, for example, could provide tamper-evident audit trails for AI decisions, while IoT expands the range of devices on which AI must run securely and efficiently.

Conclusion

The Keeper AI Standards Test is not just a technical assessment; it is a comprehensive framework for building trust in AI systems. By evaluating performance, security, privacy, ethics, and explainability, it helps determine whether AI solutions are ready for real-world deployment. For organizations serious about AI-driven security and enterprise reliability, understanding and preparing for this test is a strategic necessity. It encourages better design, stronger governance, and more responsible innovation. Ultimately, the value of the Keeper AI Standards Test lies in its ability to transform AI from a powerful but opaque tool into a transparent, reliable, and trustworthy asset.

Frequently Asked Questions (FAQs)

What is the Keeper AI Standards Test used for?

The Keeper AI Standards Test is used to evaluate the security, reliability, compliance, and ethical design of AI-powered systems, especially in enterprise and cybersecurity environments.

Who should prepare for the Keeper AI Standards Test?

AI developers, enterprise IT teams, security professionals, and compliance officers should all be involved in preparing for the test to ensure system-wide readiness.

Does the test focus only on technical performance?

No, it also evaluates data privacy, explainability, bias mitigation, and operational governance, making it a holistic AI assessment framework.

How long does it take to prepare for the test?

Preparation time varies, but organizations typically need several weeks to months, depending on system complexity and documentation maturity.

Is passing the Keeper AI Standards Test mandatory?

While not always legally mandatory, passing the test is increasingly important for trust, compliance, and long-term AI deployment success.